Study finds AI symptom checkers could dangerously mislead patients

Amelia Jones

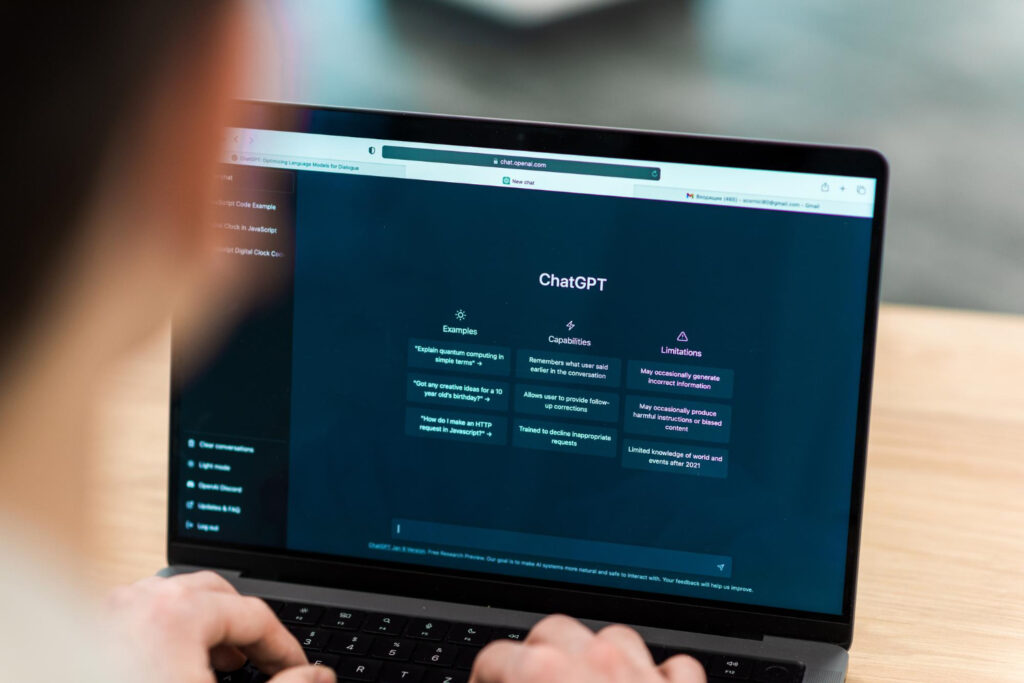

A new study has found that AI chatbots pose risks to users seeking help identifying their medical symptoms.

AI chatbots built on large language models (LLMs) are often promoted as a way to make healthcare information more accessible, particularly for people who may struggle to get timely medical advice.

But new research suggests that while these systems may perform well in theory, they can fall short or become dangerous when people use them to make decisions about their health.

The study, published in Nature Medicine, was carried out by researchers from the University of Oxford’s Internet Institute and Nuffield Department of Primary Care Health Sciences, in partnership with MLCommons, Bangor University’s North Wales Medical School and other institutions.

It found a significant gap between how well AI models perform on standard medical knowledge tests and how useful they are for individuals seeking guidance about symptoms they are experiencing.

To assess this, researchers ran a randomised trial involving nearly 1,300 online participants.

Each participant was presented with detailed medical scenarios developed by doctors, ranging from a young man developing a severe headache after a night out to a new mother experiencing persistent breathlessness and exhaustion.

One group used an LLM to assist their decision-making, while a control group used traditional sources of information.

The researchers then evaluated how accurately participants identified the likely medical issues and the most appropriate next step, such as visiting a GP or going to A&E.

They also compared these outcomes to the results of standard LLM testing strategies, which do not involve real human users.

Key problems

A key problem identified by researchers was a breakdown in communication between users and the AI systems. Participants often struggled to know what information to provide to get accurate advice. At the same time, the responses they received frequently blended sound medical guidance with poor or misleading recommendations, leaving users confused.

The study also found that small changes in how questions were phrased could lead to very different answers from the same model.

Researchers said this inconsistency presents a particular risk in health-related contexts, where recognising red flags and knowing when to seek urgent care can be critical. When users lack medical training, distinguishing between safe and unsafe advice is even more challenging.

Dr Rebecca Payne, a GP and lead medical practitioner on the study, who is a Clinical Senior Lecturer at Bangor University and a Clarendon-Reuben Scholar at the Nuffield Department of Primary Care Health Sciences, said: “These findings highlight the difficulty of building AI systems that can genuinely support people in sensitive, high-stakes areas like health.

“Despite all the hype, AI just isn’t ready to take on the role of the physician. Patients need to be aware that asking a large language model about their symptoms can be dangerous, giving wrong diagnoses and failing to recognise when urgent help is needed.”

Andrew Bean, a doctoral researcher at the Oxford Internet Institute, said: “Designing robust testing for large language models is key to understanding how we can make use of this new technology.

“In this study, we show that interacting with humans poses a challenge even for top LLMs. We hope this work will contribute to the development of safer and more useful AI systems.”

As AI tools become increasingly embedded in everyday life, the findings serve as a caution against overconfidence in their abilities, particularly in areas where mistakes can have serious consequences.

While chatbots may be useful for general information or administrative tasks, this study suggests they are not yet a safe substitute for professional medical judgement.


The same difficulties are encountered by qualified staff, especially when not in a face-to-face consultation, where all your senses are important. AI will be useful first in conjunction with a clinician in a face-to-face consultation, to distinguish the hoofbeats of horses from zebras, as the adage has it about distinguishing the rare from the commonplace.

I understand the concerns raised in this article but I’m afraid to say that ChatGPT has provided me with much more valuable information than my GP did, and it even has better manners. Used with common sense and an appreciation of its limits it can be a great tool, especially with the state of the health service as it is.

We have found WI Chatgpt amazing. It highlighted very basic tests that NHS weren’t doing because of costs. Many people go undiagnosed because NHS have to keep to the NHS pathway and if you don’t tick that box you don’t get diagnosed.

I would rather trust AI than my doctor